An AI system can be described in terms of a rational agent and its environment. Agents sense the environment through sensors and act on it through actuators. An AI agent can have mental properties such as knowledge, belief, and intention.

What is an Agent?

An agent can be anything that perceives its environment through sensors and acts upon that environment through actuators. An agent runs in a cycle of perceiving, thinking, and acting.

An agent can be –

Human-Agent :

A human agent has eyes, ears, and other organs that work as sensors, and hands, legs, and a vocal tract that work as actuators.

Robotic Agent :

A robotic agent can have cameras, an infrared range finder, and NLP for sensors, and various motors for actuators.

Software Agent :

A software agent can have keystrokes and file contents as sensory input; it acts on those inputs and displays output on the screen.

Hence the world around us is full of agents such as thermostats, cell phones, and cameras, and even we are agents. Before moving forward, we should first know about sensors, effectors, and actuators.

Sensor:

A sensor is a device that detects changes in the environment and sends the information to other electronic devices. An agent observes its environment through sensors.

Actuators:

Actuators are the components of machines that convert energy into motion. They are responsible only for moving and controlling a system. An actuator can be an electric motor, gears, rails, etc.

Effectors:

Effectors are the devices which affect the environment. Effectors can be legs, wheels, arms, fingers, wings, fins, and display screen.

Intelligent Agents

An intelligent agent is an autonomous entity that acts upon an environment using sensors and actuators to achieve goals. An intelligent agent may learn from the environment to achieve its goals. A thermostat is an example of an intelligent agent.

Following are the four main rules for an AI agent:

Rule 1: An AI agent must have the ability to perceive the environment.

Rule 2: The observation must be used to make decisions.

Rule 3: Decision should result in an action.

Rule 4: The action taken by an AI agent must be a rational action.
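The first three rules (perceive, decide, act) can be sketched as a small loop. The example below is only an illustration built around the thermostat mentioned earlier; the `Room` and `ThermostatAgent` classes, the one-degree-per-step dynamics, and the tolerance value are all assumptions made for this sketch, not part of any standard library.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Room:
    """Hypothetical environment: a room with a single temperature reading."""
    temperature: float

    def apply(self, action: str) -> None:
        # Assumed dynamics: heating adds one degree, cooling removes one.
        if action == "heat":
            self.temperature += 1.0
        elif action == "cool":
            self.temperature -= 1.0


class ThermostatAgent:
    """Illustrative agent that follows the perceive, decide, act cycle."""

    def __init__(self, target: float, tolerance: float = 0.5) -> None:
        self.target = target
        self.tolerance = tolerance

    def perceive(self, room: Room) -> float:
        # Rule 1: sense the environment.
        return room.temperature

    def decide(self, percept: float) -> str:
        # Rule 2: use the observation to make a decision.
        if percept < self.target - self.tolerance:
            return "heat"
        if percept > self.target + self.tolerance:
            return "cool"
        return "idle"

    def step(self, room: Room) -> str:
        # Rule 3: the decision results in an action on the environment.
        action = self.decide(self.perceive(room))
        room.apply(action)
        return action


def run(agent: ThermostatAgent, room: Room, steps: int) -> List[str]:
    """Run the agent for a fixed number of cycles and record its actions."""
    return [agent.step(room) for _ in range(steps)]
```

Under these assumptions, the agent heats a cold room until the reading is within tolerance of the target and then stays idle.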

Rational Agent:

A rational agent is an agent that has clear preferences, models uncertainty, and acts to maximize its performance measure over all possible actions. A rational agent is said to do the right thing.

AI is about creating rational agents, drawing on game theory and decision theory, for various real-world scenarios. For an AI agent, rational action matters most because in a reinforcement learning algorithm the agent gets a positive reward for each best possible action and a negative reward for each wrong action.

Rationality:

The rationality of an agent is measured by its performance measure. Rationality can be judged on the basis of the following points:

- The performance measure, which defines the success criterion.

- The agent's prior knowledge of its environment.

- The best possible actions that the agent can perform.

- The sequence of percepts.

Structure of an AI Agent:

The task of AI is to design an agent program that implements the agent function. The structure of an intelligent agent is a combination of architecture and agent program.

Agent = Architecture + Agent program

Following are the three main terms involved in the structure of an AI agent:

Architecture: The architecture is the machinery that an AI agent executes on.

Agent Function: Agent function is used to map a percept to an action.

f : P* → A

Agent program: An agent program is an implementation of the agent function. It executes on the physical architecture to produce the function f.

The environment is the task environment (the problem) for which the rational agent is the solution.
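One way to picture an agent program that implements the agent function is a lookup table keyed by the percept sequence seen so far. The sketch below is a minimal illustration under assumed names: the `(location, status)` percept format, the `TableDrivenAgent` class, and the `"NoOp"` default are hypothetical choices made for this example.

```python
from typing import Dict, List, Tuple

# Hypothetical percept format: (location, status).
Percept = Tuple[str, str]


class TableDrivenAgent:
    """Agent program that looks up the whole percept sequence in a table."""

    def __init__(self, table: Dict[Tuple[Percept, ...], str], default: str = "NoOp") -> None:
        self.table = table
        self.default = default
        self.percepts: List[Percept] = []  # percept history, i.e. an element of P*

    def __call__(self, percept: Percept) -> str:
        # Append the new percept, then map the full sequence to an action.
        self.percepts.append(percept)
        return self.table.get(tuple(self.percepts), self.default)


# A tiny, hand-written table for illustration only.
TABLE = {
    (("A", "Dirty"),): "Suck",
    (("A", "Dirty"), ("A", "Clean")): "Right",
}
```

The table grows with every possible percept sequence, which is why practical agent programs compute the action instead of storing it.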

Any task environment is characterised on the basis of PEAS. PEAS is a type of model on which an AI agent works. When we define an AI agent or rational agent, we can group its properties under the PEAS representation model. It is made up of four words.

“P” stands for Performance measure, the criteria used to evaluate the success of a system’s actions. “E” represents Environment, the context or setting in which the system operates. “A” is for Actuators, the mechanisms that enable the system to act upon its environment. Lastly, “S” stands for Sensors, the devices that allow the system to receive information or feedback from its environment.

P: Performance measure

E: Environment

A: Actuators

S: Sensors

1. Performance: The performance characteristic that determines whether the agent is successful. For example, for a vacuum cleaner agent, a clean floor and optimal energy consumption might be performance measures.

2. Environment: The physical characteristics and constraints expected. For example, wood floors and furniture in the way.

3. Actuators: The physical or logical constructs that take action. For the vacuum cleaner, for example, these are the suction pumps.

4. Sensors: The physical or logical constructs that sense the environment. For the vacuum cleaner, these are cameras and dirt sensors.

For example, PEAS for a self-driving car:

Performance: Safety, time, legal drive, comfort

Environment: Roads, other vehicles, road signs, pedestrians

Actuators: Steering, accelerator, brake, signal, horn

Sensors: Camera, GPS, speedometer, odometer, accelerometer, sonar
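A PEAS description is just structured data, so it can be written down as a small record. The following is a minimal sketch; the `PEAS` class and the `SELF_DRIVING_CAR` constant are names invented for this illustration, and the values are copied from the self-driving car example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class PEAS:
    """Container for a PEAS task-environment description (illustrative only)."""
    performance: Tuple[str, ...]
    environment: Tuple[str, ...]
    actuators: Tuple[str, ...]
    sensors: Tuple[str, ...]

    def summary(self) -> str:
        """Return one line per PEAS component."""
        parts = (
            ("Performance", self.performance),
            ("Environment", self.environment),
            ("Actuators", self.actuators),
            ("Sensors", self.sensors),
        )
        return "\n".join(f"{name}: {', '.join(values)}" for name, values in parts)


SELF_DRIVING_CAR = PEAS(
    performance=("safety", "time", "legal drive", "comfort"),
    environment=("roads", "other vehicles", "road signs", "pedestrians"),
    actuators=("steering", "accelerator", "brake", "signal", "horn"),
    sensors=("camera", "GPS", "speedometer", "odometer", "accelerometer", "sonar"),
)

print(SELF_DRIVING_CAR.summary())
```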

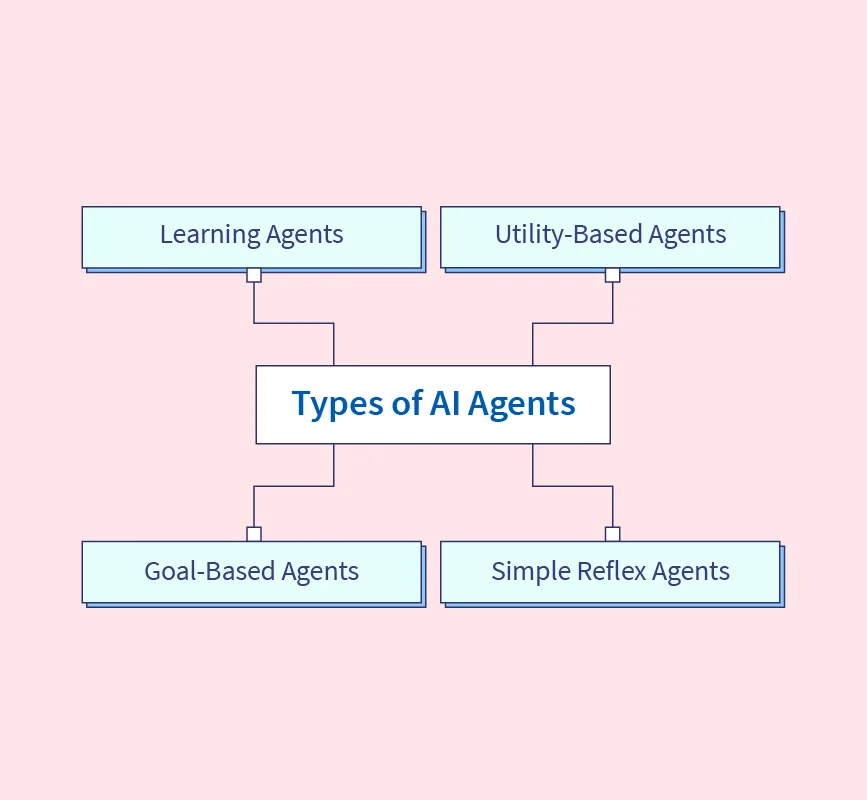

Types of AI Agents

Agents can be grouped into five classes based on their degree of perceived intelligence and capability. All these agents can improve their performance and generate better actions over time. The classes are given below.

- Simple Reflex Agent

- Model-based reflex agent

- Goal-based agents

- Utility-based agent

- Learning agent

1. Simple Reflex agent

- The simple reflex agents are the simplest agents. These agents take decisions on the basis of the current percepts and ignore the rest of the percept history.

- These agents only succeed in the fully observable environment.

- The simple reflex agent does not consider any part of the percept history during its decision and action process.

- The simple reflex agent works on condition-action rules, which map the current state to an action. For example, a room cleaner agent acts only if there is dirt in the room.

- Problems with the simple reflex agent design approach:

  - They have very limited intelligence.

  - They do not have knowledge of non-perceptual parts of the current state.

  - Their condition-action rule sets are mostly too big to generate and to store.

  - They are not adaptive to changes in the environment.
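The condition-action idea can be sketched with a room cleaner in a two-square world. The squares named `A` and `B`, the `(location, status)` percept format, and the action names are assumptions made for this illustration.

```python
from typing import Tuple

# Hypothetical percept format: (location, status), e.g. ("A", "Dirty").
Percept = Tuple[str, str]


def simple_reflex_vacuum_agent(percept: Percept) -> str:
    """Choose an action from the current percept only; no history is kept."""
    location, status = percept
    # Condition-action rules, checked in order.
    if status == "Dirty":
        return "Suck"
    if location == "A":
        return "Right"
    return "Left"
```

Because the function keeps no memory, it moves back and forth forever once both squares are clean, which illustrates the limited intelligence noted above.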

2. Model-based reflex agent

- The model-based agent can work in a partially observable environment and track the situation.

- A model-based agent has two important factors:

  - Model: It is knowledge about “how things happen in the world,” which is why it is called a model-based agent.

  - Internal state: It is a representation of the current state based on the percept history.

- These agents have a model, “which is knowledge of the world,” and they perform actions based on that model.

- Updating the agent state requires information about:

  - a. How the world evolves

  - b. How the agent’s action affects the world.
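The role of the internal state can be sketched by giving a room cleaner a memory of each square. This is an illustration under stated assumptions: a two-square world named `A` and `B`, a `(location, status)` percept format, a world model in which squares stay clean once cleaned, and an action model in which `Suck` always leaves the current square clean.

```python
from typing import Dict, Optional, Tuple

# Hypothetical percept format: (location, status).
Percept = Tuple[str, str]


class ModelBasedVacuumAgent:
    """Keeps an internal state so it can act sensibly on partial observations."""

    def __init__(self, locations: Tuple[str, ...] = ("A", "B")) -> None:
        # Internal state: last known status of every square (None = unknown).
        self.state: Dict[str, Optional[str]] = {loc: None for loc in locations}

    def update_state(self, percept: Percept) -> None:
        # Assumed world model: a square keeps its status until the agent acts,
        # so the latest percept simply overwrites the stored status.
        location, status = percept
        self.state[location] = status

    def __call__(self, percept: Percept) -> str:
        self.update_state(percept)
        location, status = percept
        if status == "Dirty":
            # Assumed action model: sucking leaves the current square clean.
            self.state[location] = "Clean"
            return "Suck"
        if all(s == "Clean" for s in self.state.values()):
            return "NoOp"  # the model says there is nothing left to do
        return "Right" if location == "A" else "Left"
```

Unlike a pure reflex agent, this one stops once its internal state says every square is clean, even though it can only observe one square at a time.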

3. Goal-based agents

- Knowledge of the current state of the environment is not always sufficient for an agent to decide what to do.

- The agent needs to know its goal, which describes desirable situations.

- Goal-based agents expand the capabilities of the model-based agent by having the “goal” information.

- They choose an action so that they can achieve the goal.

- These agents may have to consider a long sequence of possible actions before deciding whether the goal is achieved or not. Such consideration of different scenarios is called searching and planning, which makes an agent proactive.
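Searching for a sequence of actions that reaches the goal can be sketched with breadth-first search, which is only one of many possible search strategies. The `plan` function, the 3x3 grid world, and the action names below are assumptions made for this illustration.

```python
from collections import deque
from typing import Callable, Hashable, Iterable, List, Optional, Tuple

State = Hashable
Successors = Callable[[State], Iterable[Tuple[str, State]]]


def plan(start: State, goal: State, successors: Successors) -> Optional[List[str]]:
    """Breadth-first search for a shortest action sequence from start to goal."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if state == goal:
            return actions
        for action, next_state in successors(state):
            if next_state not in visited:
                visited.add(next_state)
                frontier.append((next_state, actions + [action]))
    return None  # the goal cannot be reached


def grid_successors(state: Tuple[int, int], size: int = 3) -> List[Tuple[str, Tuple[int, int]]]:
    """Hypothetical world model: moves on a size x size grid, staying in bounds."""
    x, y = state
    moves = {
        "right": (x + 1, y),
        "left": (x - 1, y),
        "up": (x, y + 1),
        "down": (x, y - 1),
    }
    return [
        (action, nxt)
        for action, nxt in moves.items()
        if 0 <= nxt[0] < size and 0 <= nxt[1] < size
    ]
```

A goal-based agent built on this sketch would call `plan` with its current state and goal, then execute the returned actions one at a time.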

4. Utility-based agents

- These agents are similar to goal-based agents but add a utility measurement, which provides a measure of success at a given state.

- Utility-based agents act based not only on goals but also on the best way to achieve the goal.

- The utility-based agent is useful when there are multiple possible alternatives and the agent has to choose among them to perform the best action.

- The utility function maps each state to a real number, which indicates how efficiently each action achieves the goals.
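Choosing among alternatives with a utility function can be sketched as picking the action whose resulting state scores highest. The route names, the minutes and risk figures, and the weighting in `route_utility` are invented for this illustration and carry no real-world meaning.

```python
from typing import Callable, Dict

# A state is described here by a few numeric features (an assumption).
State = Dict[str, float]


def choose_action(outcomes: Dict[str, State], utility: Callable[[State], float]) -> str:
    """Return the action whose resulting state has the highest utility."""
    return max(outcomes, key=lambda action: utility(outcomes[action]))


# Hypothetical outcomes: the state each route is expected to lead to.
ROUTES: Dict[str, State] = {
    "highway": {"minutes": 20.0, "risk": 0.3},
    "side_streets": {"minutes": 35.0, "risk": 0.1},
    "toll_road": {"minutes": 15.0, "risk": 0.2},
}


def route_utility(state: State) -> float:
    """Map a state to a real number; shorter and safer trips score higher."""
    return -state["minutes"] - 50.0 * state["risk"]


best_route = choose_action(ROUTES, route_utility)
```

Swapping in a different utility function, for example one that looks only at risk, changes the chosen action without changing the goal of reaching the destination.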

5. Learning Agents

- A learning agent in AI is a type of agent that can learn from its past experiences; that is, it has learning capabilities.

- It starts to act with basic knowledge and is then able to act and adapt automatically through learning.

- A learning agent has four main conceptual components:

  - Learning element: It is responsible for making improvements by learning from the environment.

  - Critic: The learning element takes feedback from the critic, which describes how well the agent is doing with respect to a fixed performance standard.

  - Performance element: It is responsible for selecting external actions.

  - Problem generator: This component is responsible for suggesting actions that will lead to new and informative experiences.

- Hence, learning agents are able to learn, analyze performance, and look for new ways to improve the performance.
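The four components can be sketched in a very small agent that learns which action pays off. This is a deliberately simplified illustration: the `LearningAgent` class, the running-average update, the "try every action once" exploration rule, and the reward values are assumptions for this sketch and not a standard algorithm from the text.

```python
from typing import Callable, Dict, List, Optional, Set


class LearningAgent:
    """Toy agent with a learning element, critic, performance element, and problem generator."""

    def __init__(self, actions: List[str], standard: float = 0.0, learning_rate: float = 0.5) -> None:
        self.actions = list(actions)
        self.standard = standard  # fixed performance standard used by the critic
        self.learning_rate = learning_rate
        self.values: Dict[str, float] = {a: 0.0 for a in self.actions}
        self.tried: Set[str] = set()

    def problem_generator(self) -> Optional[str]:
        # Suggest an action that has never been tried, to gain new experience.
        for action in self.actions:
            if action not in self.tried:
                return action
        return None

    def performance_element(self) -> str:
        # Select the external action currently believed to be best.
        return max(self.actions, key=lambda a: self.values[a])

    def critic(self, reward: float) -> float:
        # Feedback: how well the agent did relative to the fixed standard.
        return reward - self.standard

    def learning_element(self, action: str, feedback: float) -> None:
        # Move the stored estimate toward the critic's feedback.
        self.values[action] += self.learning_rate * (feedback - self.values[action])

    def step(self, environment: Callable[[str], float]) -> str:
        action = self.problem_generator() or self.performance_element()
        self.tried.add(action)
        reward = environment(action)
        self.learning_element(action, self.critic(reward))
        return action


# Hypothetical environment: a fixed reward per action.
REWARDS = {"left": -1.0, "right": 1.0}
```

With these rewards, the agent tries each action once and then keeps choosing the one that earned positive feedback.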

Chatbots to attempt the Turing test:

ELIZA: ELIZA was a natural language processing computer program created by Joseph Weizenbaum. It was created to demonstrate communication between machines and humans. It was one of the first chatterbots to attempt the Turing test.

Parry: Parry was a chatterbot created by Kenneth Colby in 1972. Parry was designed to simulate a person with paranoid schizophrenia (most common chronic mental disorder). Parry was described as “ELIZA with attitude.” Parry was tested using a variation of the Turing test in the early 1970s.

Eugene Goostman: Eugene Goostman was a chatbot developed in Saint Petersburg in 2001. This bot has competed in a number of Turing test contests. In June 2012, at an event, Goostman won the competition promoted as the largest-ever Turing test contest, in which it convinced 29% of judges that it was a human. Goostman was portrayed as a 13-year-old virtual boy.

The Chinese Room Argument

Many philosophers disagreed with the whole concept of artificial intelligence. The most famous argument among them is the “Chinese Room.” In 1980, John Searle presented the “Chinese Room” thought experiment in his paper “Minds, Brains, and Programs,” which argued against the validity of the Turing test.

According to his argument, “Programming a computer may make it appear to understand a language, but it will not produce a real understanding of language or consciousness in a computer.”

He argued that machines such as ELIZA and Parry could easily pass the Turing test by manipulating keywords and symbols, but they had no real understanding of language. So this cannot be described as a machine having a human-like “thinking” capability.

Features required for a machine to pass the Turing test

- Natural language processing: NLP is required to communicate with the interrogator in a general human language such as English.

- Knowledge representation: To store and retrieve information during the test.

- Automated reasoning: To use the previously stored information for answering the questions.

- Machine learning: To adapt to new changes and detect generalized patterns.

- Vision (for total Turing test): To recognize the interrogator's actions and other objects during a test.

- Motor Control (For total Turing test): To act upon objects if requested.